Experts reveal the challenges of audio deepfakes and the ongoing battle against misinformation.

For some time now, artificial intelligence (AI) has been capable of mimicking human speech. But what happens when it becomes indistinguishable from the real thing? With advanced software, almost anyone can now create AI-generated audio that convincingly reproduces a specific person’s voice. The result is known as an ‘audio deepfake.’

Speech synthesis itself isn’t new; many of us have used it through GPS apps, Google Translate, or voice assistants like Siri. What is relatively new, and troubling, is the ability to generate audio that sounds like a specific person we know.

Imagine the potential for deception when anyone can assume a new identity with a convincing synthetic voice. To see how easy this is, I tested several audio generators available online and produced clips impersonating various individuals. The results were surprisingly convincing, which raises serious concerns about how such technology could be used.

Audio deepfakes can easily be put to malicious use, including serious fraud. As these tools grow more accessible, it becomes harder to tell what is real from what is not. This is where experts like Dr. Tan Lee Ngee play a crucial role.

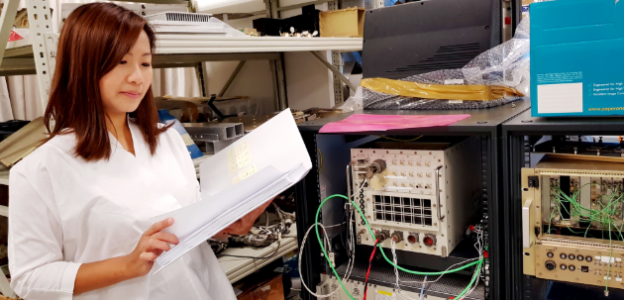

Dr. Tan is a Principal Researcher in the Sensors Division of DSO National Laboratories, Singapore’s leading defense research organization, which has more than 1,600 scientists and engineers dedicated to safeguarding the nation. There, she leads research to detect audio deepfakes and to develop AI tools that assess whether audio is authentic. In essence, she is fighting AI with AI.

Understanding Deepfakes

Dr. Tan explains her work with clarity and patience, breaking complex concepts down into understandable terms. “Familiarity with the speaker can often help in identifying whether audio is real or fake,” she says. Even so, she acknowledges that telling the two apart can be difficult.

Deepfakes can be generated from scratch or made by altering existing audio and video. This poses significant challenges, as viral TikTok videos that misrepresented public figures or manipulated footage during critical global events have shown.

The consequences of synthetic media go far beyond harmless pranks; deepfakes can fuel misinformation and manipulation. According to a 2020 study by the Institute of Policy Studies, many Singaporeans struggle to tell falsehoods from the truth, particularly older people, who may trust online media more readily.

“It’s challenging to change people’s views,” Dr. Tan remarks. “Our experiences and values can heavily influence our beliefs, making it hard to reconcile differing perspectives, even when presented with compelling evidence.”

The Rise of Audio Deepfakes

The rapid advancement of deepfake technology has taken many by surprise. “Just five years ago, few would have considered audio deepfakes a genuine threat,” Dr. Tan reflects. At that time, synthetic speech lacked the quality needed to deceive listeners effectively.

Recent progress in AI research has democratized deepfake technology, enabling anyone with an internet connection to create convincing audio manipulations.

Dr. Tan did not consciously choose this field; she feels it chose her. A lifelong passion for mathematics and science led her to DSO, where she pursued a PhD in electrical engineering. Her expertise in signal processing, which includes analyzing and manipulating data such as audio, evolved naturally into deepfake detection.

Protecting Against Manipulation

Dr. Tan’s work also shows how easily AI itself can be deceived. Her colleague Jansen Jarret Sta Maria, an AI scientist, explains the concept of “adversarial noise”: small, deliberately crafted changes to an input that can cause an AI system to make mistakes. For example, if an attacker subtly alters a stop sign, a self-driving car may fail to recognize it, with potentially dangerous consequences.

“The reality is that all AI systems are vulnerable,” Jansen says. “Our job is to make them resilient against these threats.” His enthusiasm for his work is evident as he describes the challenges of protecting AI systems from manipulation.

The misinformation landscape is constantly evolving, and attackers keep devising new ways to exploit vulnerabilities. Jansen stresses the importance of staying proactive in this ongoing battle.

A Call for Vigilance

While experts like Dr. Tan and Jansen work on the front lines of this invisible war, the average person can contribute by developing critical analysis skills. By questioning where information comes from and recognizing potential biases, individuals can better protect themselves against misinformation.

Dr. Tan and Jansen’s insights reveal both the potential and the challenges of AI technology in an unpredictable world. The tools they develop aim to create a safer information environment, but responsibility also lies with us as consumers of information.

Jansen concludes with reassurance: “Although the public may feel helpless, our work is dedicated to helping navigate these challenges.”